Apple has introduced an automatic photo analysis feature, Enhanced Visual Search, sparking debates over user consent and privacy. The feature identifies landmarks in photos and is enabled by default, without explicit user permission. It relies on advanced privacy-preserving techniques, but it has raised questions about the balance between technological innovation and user rights.

Understanding Enhanced Visual Search

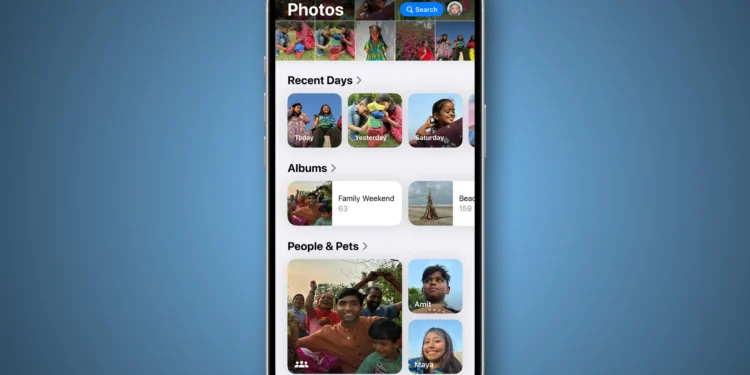

According to Apple, Enhanced Visual Search uses homomorphic encryption and differential privacy to analyze photos stored on iOS and macOS devices. It lets iPhones and Macs search photos by landmark or point of interest by matching images against a global index maintained by Apple. The process is designed to be private, preventing Apple from accessing the actual content of the photos.

Jeff Johnson, a software developer, highlighted the lack of communication from Apple regarding the deployment of this feature, which is believed to have rolled out with iOS 18.1 and macOS 15.1 on October 28, 2024. Despite Apple’s claims of privacy preservation, the decision to enable the feature by default, leaving users to opt out, has not been well received by the community.

Technical Breakdown and Privacy Implications

The local machine-learning model on Apple devices identifies potential landmarks in photos and creates a vector embedding—a numerical representation of that image section. This embedding is then encrypted using homomorphic techniques, allowing computations to be performed on the encrypted data without revealing its contents.
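To illustrate what a vector embedding is and how it could be compared against an index of landmarks, here is a minimal plaintext sketch. It is not Apple's model or index: the four-dimensional vectors, the `LANDMARK_INDEX` entries, and the function names are all hypothetical, and the comparison is done in the clear, which the real system is designed to avoid.

```python
import math

# Hypothetical index: landmark name -> embedding vector (made-up numbers).
LANDMARK_INDEX = {
    "landmark_a": [0.9, 0.1, 0.0, 0.2],
    "landmark_b": [0.1, 0.8, 0.3, 0.0],
    "landmark_c": [0.0, 0.2, 0.9, 0.4],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(embedding, index=LANDMARK_INDEX):
    """Return the index entry whose embedding is most similar to the query."""
    return max(index, key=lambda name: cosine(embedding, index[name]))


# Hypothetical embedding produced on-device for a region of a photo.
query = [0.85, 0.15, 0.05, 0.25]
print(best_match(query))
```

Similar image regions produce nearby vectors, so the lookup reduces to arithmetic on numbers. That is what makes it possible to run the comparison on encrypted values instead.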

This setup ensures that the data remains encrypted throughout the process, from the device to Apple’s servers, where further encrypted computations help determine if the image matches any known landmarks. The result is sent back to the device, still encrypted, for final decryption and presentation to the user.
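The round trip described above can be sketched with a toy example. This is not Apple's implementation: it swaps in the textbook Paillier cryptosystem, which is additively homomorphic, because it fits in a few lines. The key size, the integer-quantized embedding, and every function name here are assumptions made for illustration.

```python
import math
import secrets

# Toy key material: two known Mersenne primes. Far too small for real use.
P = 2**31 - 1
Q = 2**61 - 1


def keygen(p=P, q=Q):
    """Return (public modulus n, private key) for textbook Paillier, g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse (Python 3.8+)
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt integer m; negative values are encoded modulo n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # (1 + n)^m mod n^2 equals 1 + m*n mod n^2
    return (1 + (m % n) * n) % n2 * pow(r, n, n2) % n2


def decrypt(n, priv, c):
    """Decrypt and map the result back to a signed integer."""
    lam, mu = priv
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    return m - n if m > n // 2 else m


def encrypted_dot(n, enc_vec, weights):
    """Server side: dot product of an encrypted vector with plaintext weights.

    Multiplying ciphertexts adds the plaintexts; raising a ciphertext to a
    power multiplies its plaintext by that scalar. No private key is used.
    """
    n2 = n * n
    acc = 1  # a valid encryption of 0
    for c, w in zip(enc_vec, weights):
        acc = acc * pow(c, w % n, n2) % n2
    return acc


# Device: encrypt a (hypothetical) quantized embedding.
n, priv = keygen()
embedding = [3, -1, 4]
enc_embedding = [encrypt(n, v) for v in embedding]

# Server: score against a (hypothetical) landmark vector without decrypting.
landmark = [2, 5, -1]
enc_score = encrypted_dot(n, enc_embedding, landmark)

# Device: decrypt the score locally.
print(decrypt(n, priv, enc_score))  # 3*2 + (-1)*5 + 4*(-1) = -3
```

The server function never receives the private key, so in this sketch it learns neither the embedding nor the resulting score. Only the device can read the result.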

However, the concern lies not in the technical capabilities of the feature but in its default activation and the implications for user consent. Michael Tsai, a software developer, points out that the approach is less private than Apple’s discontinued CSAM scanning plan, as it affects all photos, not just those uploaded to iCloud.

Community Response and Ongoing Concerns

The community response has been mixed, with significant concerns about the lack of opt-in consent. Matthew Green, an associate professor at the Johns Hopkins Information Security Institute, expressed frustration over discovering the service’s activation just days before the New Year without prior notification.

The broader issue reflects a growing discomfort with how companies manage user data and privacy. Although Apple says the data is disassociated from user accounts and IP addresses, its unilateral decision to activate the feature has not sat well with many users and experts.

Apple’s Silence and the Need for Transparency

Apple’s silence in response to inquiries about Enhanced Visual Search only adds to the frustration. Shipping a significant privacy-related feature without announcing it runs counter to the community’s desire for transparency and informed consent. Companies like Apple must balance innovation with user privacy and autonomy, and keeping users informed and in control of such features is essential to maintaining trust. The debate over Enhanced Visual Search points to the broader conversation still needed about privacy in the digital age.