The debate surrounding AI and its potential for creativity has intensified, with many experts, including Professor Nicholas Creel, weighing in on whether artificial intelligence models “learn” as humans do. But as AI continues to evolve, a deeper issue is emerging that goes beyond intellectual discussion: AI companies may be committing mass theft under the guise of “training.” This practice is raising serious questions about copyright, creativity, and the future of human artistry.

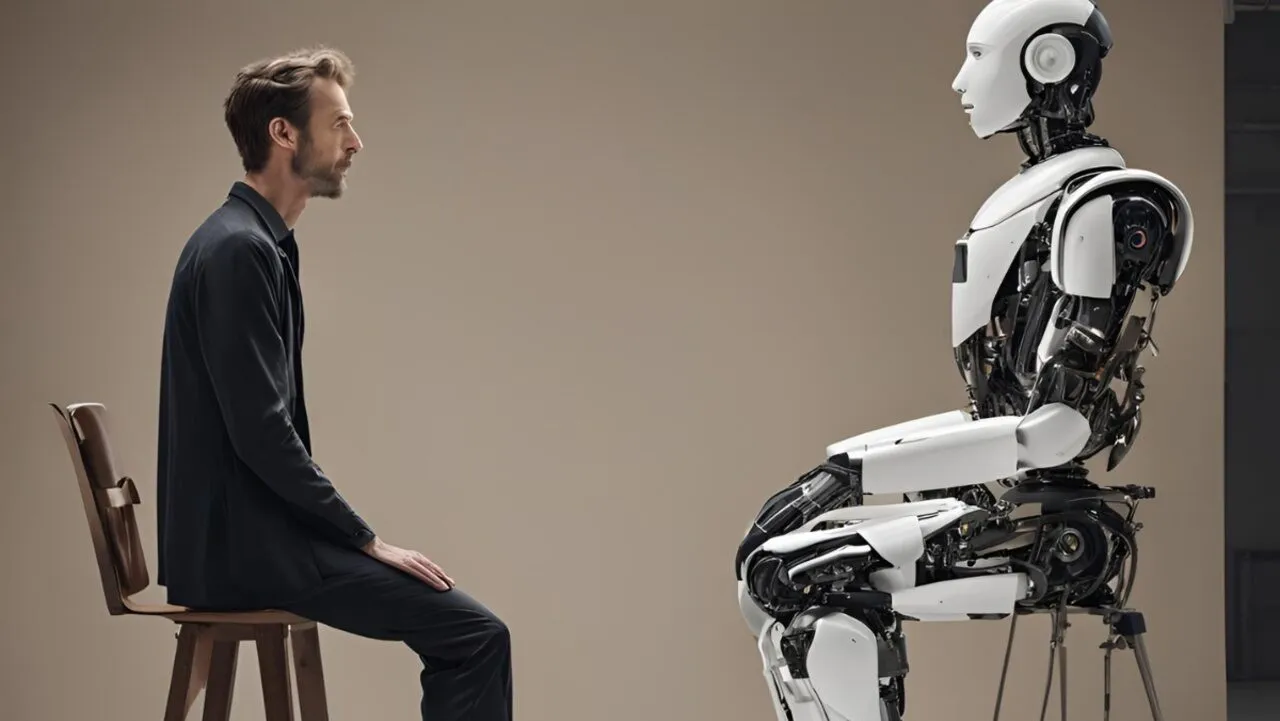

AI: Not the Same as Human Learning

Ursula K. Le Guin once wrote, “There is no right answer to the wrong question,” a sentiment that resonates deeply with the ongoing debate around AI. While AI models like Microsoft’s Copilot are capable of processing vast datasets, they don’t learn or reason the way humans do. As detailed in Erik J. Larson’s book The Myth of Artificial Intelligence, AI lacks the capacity to handle novelty or uncertainty. It can’t extrapolate new truths from sparse data, nor does it have empathy or genuine understanding.

When asked, “Does AI reason in the same way humans reason?” Microsoft’s Copilot responded:

“AI relies on large datasets and algorithms to make decisions and predictions. It processes information based on patterns and statistical analysis. AI follows predefined rules and models to arrive at conclusions. It doesn’t have intuition or emotions influencing its decisions. AI can learn from data through techniques like machine learning, but this learning is based on mathematical models and not personal experiences.”

This response underscores the stark differences between AI and human cognition. While AI can generate responses by processing patterns, it lacks the depth of human experience and creativity that arises from real-world engagement.

The Cost of AI’s “Training” on Copyrighted Works

For those in creative fields, such as musicians, the impact of AI is being felt more directly. As Moiya McTier from the Human Artistry Campaign explains, real creativity is far more than pattern recognition: it draws on lived experience, culture, and personal growth. And yet, AI companies continue to scrape data from the internet, including copyrighted works, and feed it into AI models without permission or compensation.

This raises a critical question: Shouldn’t AI companies be required to license the content they use to “train” their models?

When AI companies train their algorithms, they inevitably reproduce copyrighted works or produce derivative works. By distributing these AI-generated pieces across a vast network, these companies are infringing on exclusive rights reserved to authors under federal law. Normally, a company would seek permission to use these works and compensate the creators. However, AI companies have largely chosen to skip this step, setting the value of these copyrighted works at zero. This is a blatant devaluation of the world’s creative legacy.

The Dangers of a Derivative and Diminished Culture

The most immediate consequence of AI’s unchecked use of copyrighted works is the potential destruction of true creativity. By relying on data scraped from existing works, AI models are not capable of producing anything truly novel or groundbreaking. Instead, they generate derivatives — reassemblies of previously existing content — which, while impressive in their own right, cannot replace the spark of originality that comes from human experience and insight.

Without proper regulation, this could result in a culture that is dull and derivative, unable to push boundaries or innovate in meaningful ways. The next generation of artists, musicians, and creators may find themselves stifled by a landscape dominated by AI-generated works that mimic but never surpass human ingenuity.

AI Evangelists and the Masking of Mass Infringement

AI evangelists often paint a rosy picture of artificial intelligence, anthropomorphizing AI systems and presenting them as cute, learning entities. By reframing art as mere “data” and mass copying as “training,” they downplay the severe ethical and legal issues at play. This narrative is a deliberate attempt to mask the reality: a powerful cartel of trillion-dollar companies and deep-pocketed tech investors is committing or excusing mass copyright infringement.

While AI may not yet have the capability to understand the implications of such actions, humans see the issue clearly. This is not about creating intelligent, empathetic systems; it’s about circumventing laws and exploiting the work of human creators without their consent.

A Call for Action: Protecting Creativity in the Age of AI

As the debate around AI and creativity rages on, it’s clear that the real question we should be asking isn’t whether AI learns the same way humans do, but rather what AI companies are doing with the works of human creators. Without proper protections, AI will continue to strip away the value of creativity and contribute to a culture of imitation rather than innovation.

David Lowery, a mathematician, musician, and writer, has been an outspoken advocate for the protection of creative works in the age of AI. As a member of the bands Cracker and Camper Van Beethoven, Lowery understands firsthand the damage that mass AI “training” can do to the artistic landscape. His call to action is clear: We must take steps to protect intellectual property and ensure that AI does not become a tool for mass copyright theft. The future of creativity depends on it.