OpenAI, one of the most prominent companies in artificial intelligence, is funding academic research into AI morality. OpenAI Inc., the company’s nonprofit arm, has disclosed a grant to Duke University researchers for a project exploring whether AI can predict human moral judgments. The broader aim is to examine whether AI systems can be designed to make ethical decisions.

Through a three-year, $1 million grant, OpenAI is supporting academic work on “making moral AI.” The project’s principal investigator, Walter Sinnott-Armstrong, a professor of practical ethics at Duke, and his co-investigator, Jana Borg, are no strangers to the field. Together, they have authored studies and a book on the potential of AI to act as a “moral GPS” for human decision-making. Details of the current project remain under wraps, but the work could inform how AI is used in sensitive fields such as medicine, law, and business.

Can Machines Truly Grasp Morality?

The goal of the OpenAI-funded work is ambitious: to train algorithms to predict human moral judgments in complex scenarios. According to a press release about the project, these scenarios might involve conflicts between competing moral considerations, such as who should receive life-saving medical treatment or how to allocate limited resources fairly.

However, the journey to morally intelligent AI is fraught with challenges. Morality is a deeply nuanced and subjective concept that has eluded consensus even among philosophers for millennia. Can a machine, built on mathematical algorithms, understand the depth of human emotion, reasoning, and cultural variation that defines morality?

The nonprofit Allen Institute for AI ran into similar hurdles in 2021 with its tool, Ask Delphi. Delphi could handle simple ethical dilemmas, such as recognizing that cheating on an exam is wrong, but slightly rephrasing or rewording a question was often enough to change its verdict. Its answers also revealed biases and a shallow grasp of moral nuance. Delphi’s failings are a stark reminder of how far the field has to go.

The Mechanisms Behind Moral AI

Modern AI systems, including OpenAI’s own models, are built with machine learning. They are trained on vast datasets, much of which is sourced from the web, and they learn statistical patterns that let them make predictions. For instance, a model might learn from its training data that the phrase “to whom” is often followed by “it may concern.” Morality, however, is not simply a pattern in text; it is shaped by reasoning, emotions, and cultural norms.
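To make the “to whom” example concrete, here is a minimal sketch of pattern learning by counting. It assumes a tiny, hypothetical corpus and hypothetical helper names (`build_continuations`, `predict`); real systems such as OpenAI’s models use neural networks trained on tokens rather than a lookup table of phrase counts, so treat this only as an illustration of the idea, not as how those models work.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for "vast datasets sourced from across the web".
CORPUS = [
    "to whom it may concern",
    "to whom it may concern",
    "to whom this letter is addressed",
    "to whom much is given",
]


def build_continuations(corpus, prefix_len=2, cont_len=3):
    """Count which cont_len-word continuation follows each prefix_len-word prefix."""
    table = defaultdict(Counter)
    for line in corpus:
        words = line.split()
        for i in range(len(words) - prefix_len - cont_len + 1):
            prefix = " ".join(words[i:i + prefix_len])
            cont = " ".join(words[i + prefix_len:i + prefix_len + cont_len])
            table[prefix][cont] += 1
    return table


def predict(table, prefix):
    """Return the most frequent continuation seen after prefix, or None if never seen."""
    counts = table.get(prefix)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


table = build_continuations(CORPUS)
print(predict(table, "to whom"))  # prints "it may concern"
```

The model “knows” that “it may concern” usually follows “to whom” only because that continuation appears most often in its data. It has nothing to say about a prefix it never saw, and a skewed corpus would skew its predictions in the same way, which is the gap between pattern matching and moral reasoning the article describes.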

Because they learn from data, AI systems tend to reflect the biases of their training sets. This can skew their moral judgments, particularly when the data is dominated by Western-centric viewpoints. Delphi, for example, once judged heterosexuality more “morally acceptable” than homosexuality, an outcome that reflects the biases baked into its training data.

For this research to succeed, the resulting algorithms must account for the diverse perspectives and values of people around the world. Because morality varies so widely across cultures and individuals, achieving that kind of inclusivity remains a monumental task.

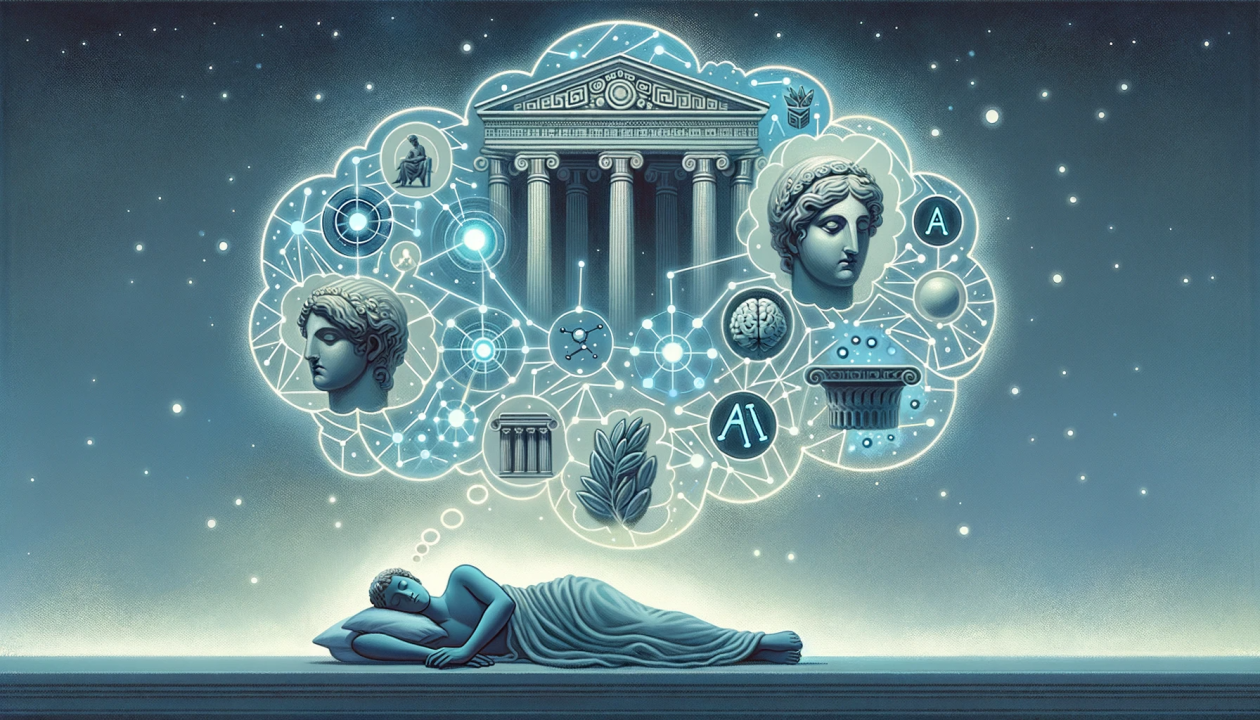

Philosophical Dilemmas in AI Morality

Even the most advanced AI systems, such as ChatGPT, display philosophical leanings when faced with moral questions. Some lean toward utilitarian principles, prioritizing outcomes that benefit the most people. Others align more closely with Kantian ethics, emphasizing strict adherence to moral rules. Neither approach is universally accepted, and the “right” answer often depends on personal beliefs.

Walter Sinnott-Armstrong and Jana Borg’s prior work sheds light on how these philosophical debates might play into AI development. One study they co-authored examined public preferences for AI decision-making in morally charged scenarios, such as determining the recipients of kidney donations. Their findings highlight the complexity of creating algorithms that align with human values.

Why OpenAI’s Morality Research Matters

Despite the challenges, the initiative could open new possibilities for AI in fields that demand careful ethical judgment. Imagine a healthcare AI that helps clinicians work through moral dilemmas in patient care, or a legal AI that helps reduce bias in judicial decisions. The potential to improve human decision-making is significant, but so are the risks of unintended consequences.

As OpenAI funds this ambitious project, the world watches with cautious optimism. The outcome of this research could shape the future of AI ethics, influencing everything from regulatory frameworks to public trust in AI technologies.

What Lies Ahead for Moral AI?

While the details of the OpenAI-funded project at Duke remain confidential, the grant is scheduled to run through 2025. By then, the research could offer key insights into whether moral AI is feasible, or instead underscore the limitations of current technology.

As AI moves into questions of morality, one thing is clear: the intersection of technology and ethics is no longer theoretical. It is here, and OpenAI is funding research to explore its boundaries.