Digital interaction now fills a large share of daily life, especially for teenagers, which makes a safe online environment a priority. Character.AI’s latest update introduces a feature aimed at the safety of younger users: the ‘Parental Insights’ tool. It is meant to show parents how their children engage with AI chatbots without exposing the conversations themselves.

What Does Parental Insights Offer?

Character.AI, a chatbot service widely used by teens, has unveiled ‘Parental Insights’, a feature that lets teenagers voluntarily send a weekly report of their chatbot activity to a parent’s email address. It is part of a broader update addressing growing concerns about how much time minors spend with chatbots and their potential exposure to inappropriate content.

The weekly report shows the teen’s average daily time on the web and mobile apps, the characters they interacted with most, and how long they spent with each. It does not include the contents of any chats, so parents get an overview of activity while the conversations themselves stay private.

An Optional, Privacy-Focused Approach

Character.AI emphasizes that parents do not need to create an account to receive the reports, which keeps the feature simple to use. Minors set it up themselves from the settings menu, so sharing is voluntary: the teen chooses to give a parent visibility rather than having oversight imposed on them.

Safety Measures and Legal Landscape

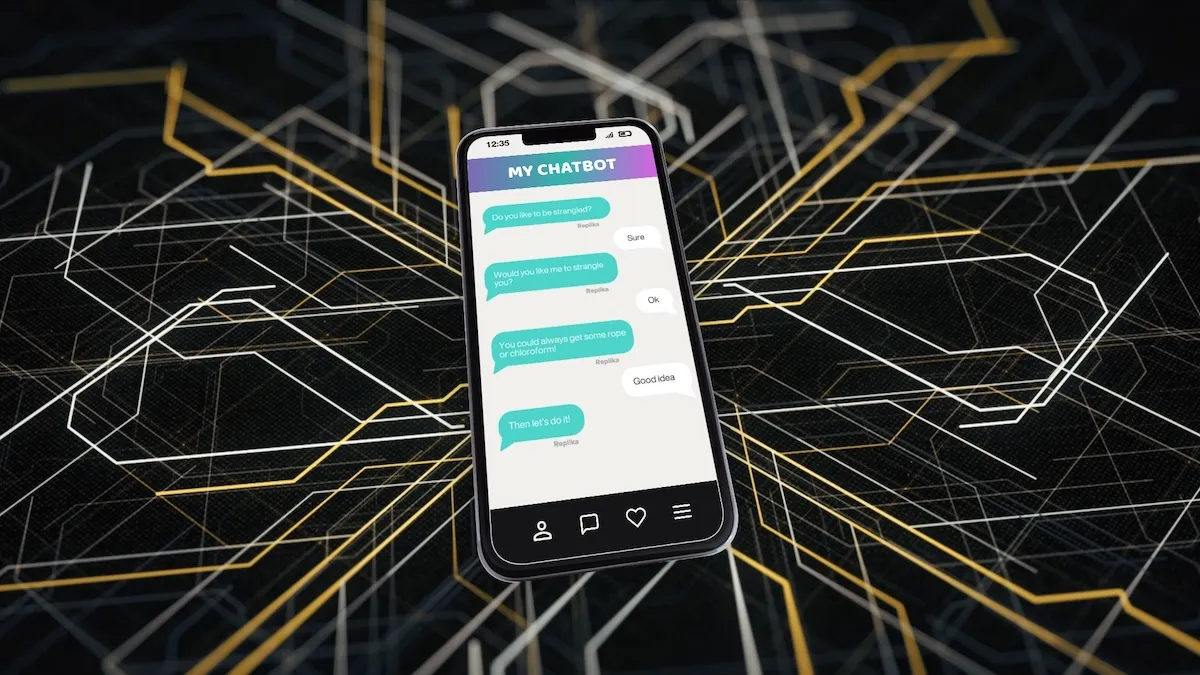

‘Parental Insights’ arrives against a backdrop of legal challenges and criticism directed at Character.AI. The platform has faced lawsuits alleging that some of its chatbots produced inappropriately sexualized content or content that promoted self-harm. In response, Character.AI has made several changes, including moving underage users to a separate model trained to avoid sensitive outputs and making notifications that remind users the chatbots are not real people more prominent.

These changes are part of the company’s ongoing efforts to adjust its service in response to criticism and regulatory scrutiny. That scrutiny extends beyond Character.AI itself: Google, which hired Character.AI’s founders in 2024, has been named alongside the company in some of the lawsuits.

Future Directions in AI Governance

As support for AI regulation and child safety legislation grows, Character.AI’s steps are likely an early stage in an evolving landscape of digital governance. With tech companies increasingly held accountable for user safety, especially for minors, tools like ‘Parental Insights’ can help bridge the gap between new technology and responsible use.

The initiative shows how AI platforms can build safety features into their products, and it offers a model other tech companies may follow. Shaping a digital future that is both safe and engaging will depend on cooperation among technology developers, parents, and regulators.