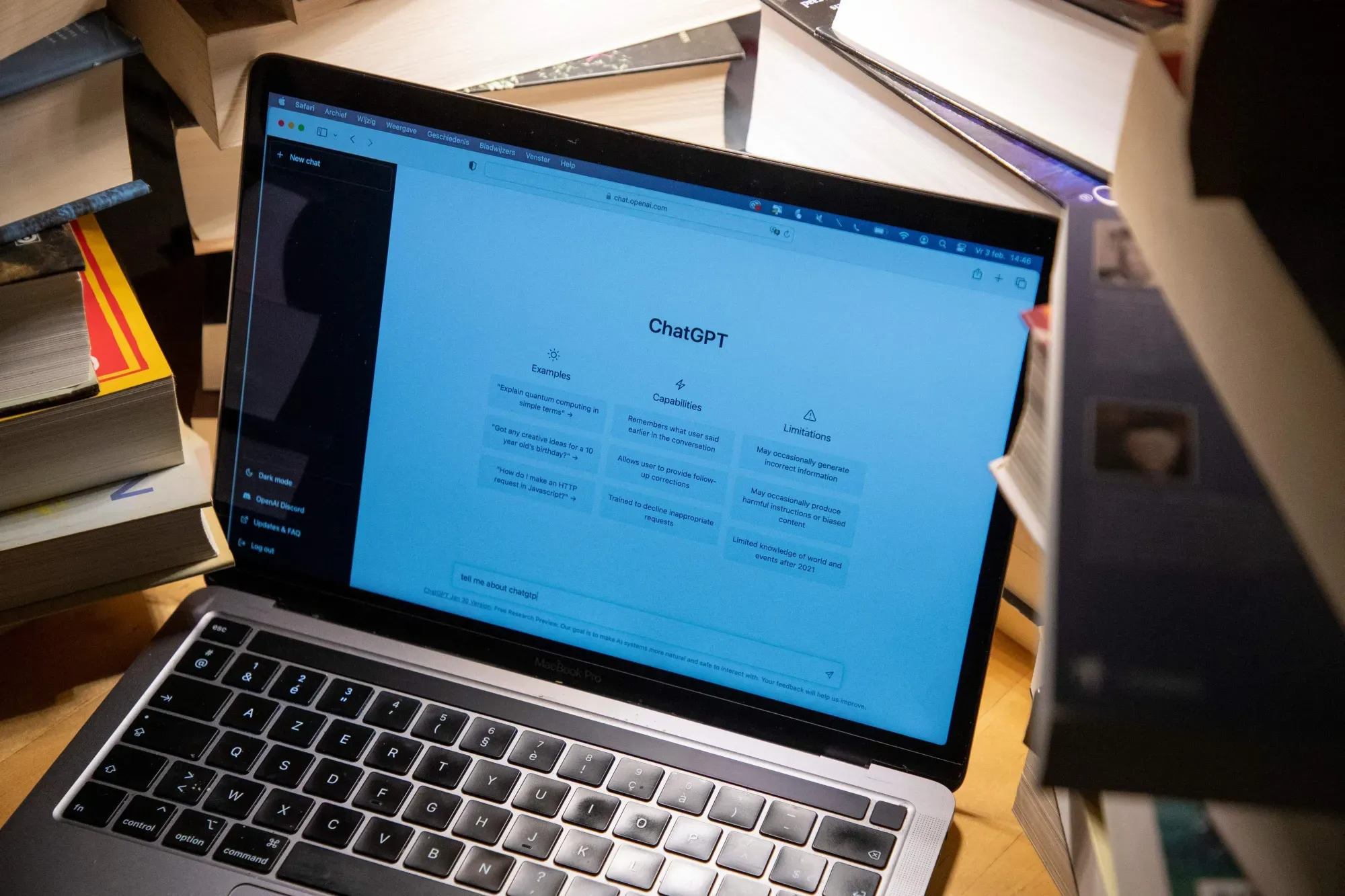

In the ever-evolving landscape of artificial intelligence, a peculiar phenomenon has emerged with DeepSeek’s latest creation, the DeepSeek V3. This new AI model, a product of substantial funding and advanced development from the Chinese AI lab, has been making waves for an unexpected reason—it seems to believe it is ChatGPT, the renowned AI from OpenAI.

DeepSeek V3: A Mirror Image of ChatGPT?

Earlier this week, both user reports on X and comprehensive testing by TechCrunch revealed that DeepSeek V3 consistently identifies itself as ChatGPT. This identification goes beyond mere naming; the AI insists it is a version of OpenAI’s GPT-4 model released in 2023. The illusion does not stop at self-identification. When queried about DeepSeek’s API, V3 offers guidance pertinent to OpenAI’s API instead, even mimicking some of GPT-4’s characteristic humour down to the exact punchlines.

The Roots of AI Doppelgängers

But why would an advanced AI model suffer such an identity crisis? The answer lies in the training mechanisms that underpin these technologies. AI models like DeepSeek V3 and ChatGPT are statistical systems, learning from vast datasets to recognize and replicate patterns. Mike Cook, a research fellow at King’s College London specializing in AI, sheds light on the situation, noting, “Obviously, the model is seeing raw responses from ChatGPT at some point, but it’s not clear where that is. It could be ‘accidental’… but unfortunately, we have seen instances of people directly training their models on the outputs of other models to try and piggyback off their knowledge.”

Cook emphasizes the dangers of such practices, comparing them to “taking a photocopy of a photocopy,” where fidelity to reality diminishes with each iteration, often leading to misleading answers and hallucinations. This not only poses ethical questions but might also contravene the terms of service of the original systems, such as OpenAI’s, which explicitly prohibit using their outputs to develop competing models.

A Landscape of AI Clones and Legal Gray Areas

The implications of this are vast and varied. As AI-generated content proliferates across the internet—estimated to make up 90% of all web content by 2026 according to some studies—the training data for these AI systems becomes increasingly “contaminated” with outputs from other AIs. This scenario has led to instances where AI models, like Google’s Gemini, claim identities of competing models, complicating efforts to maintain distinct, proprietary AI technologies.

OpenAI CEO Sam Altman’s remarks on X underscore the challenge: “It is (relatively) easy to copy something that you know works. It is extremely hard to do something new, risky, and difficult when you don’t know if it will work.” This statement highlights the innovation-versus-imitation dilemma facing AI developers.

Beyond Identity: The Risks of Echo Chambers in AI

The technical and ethical concerns go beyond mere misidentification. Heidy Khlaaf, chief AI scientist at the nonprofit AI Now Institute, points out the risks associated with “distilling” knowledge from existing models. While cost-effective, this approach can inadvertently magnify biases and flaws inherent in the source AI, leading to models that not only confuse their identity but also propagate and exacerbate existing inaccuracies.

DeepSeek V3’s case is a stark reminder of the complexities involved in developing artificial intelligence. As AI systems become more advanced and their outputs more ubiquitous, distinguishing between genuine innovation and mere replication becomes increasingly challenging. The journey forward for AI developers and regulators alike involves not just navigating technological hurdles but also addressing the profound ethical and legal implications of AI training practices.