In a twist that sounds like it’s straight out of a speculative fiction novel, a 42-year-old Tesla driver has become entangled in a legal web, claiming only a foggy memory of an incident that left a pedestrian dead. The case has thrown a glaring spotlight on the complexities and controversies surrounding Tesla’s Autopilot feature, raising critical questions about accountability, technology, and the intersection of human and machine control on the open road.

The Incident: A Tragic Collision in Question

Late one evening, Cathy Ann Donovan, a 56-year-old woman, was walking her dog along a highway when she became the victim of a hit-and-run. The suspected vehicle, a gray 2022 Tesla Model X, was linked to the scene by a combination of surveillance footage, cellphone records, and a trail of physical evidence including a windshield wiper found near Donovan’s body.

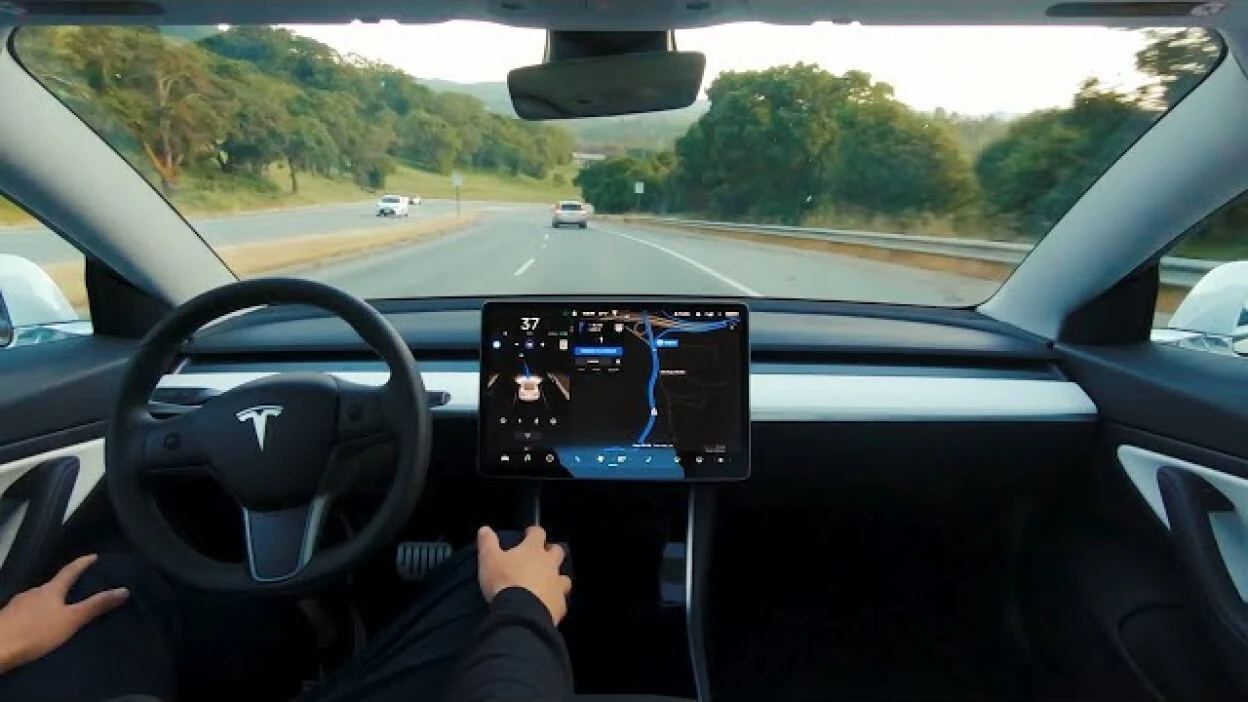

Despite the mounting evidence, the Tesla driver says he remembers little of the event. He attributes any possible involvement to the car’s Autopilot mode and to being distracted by work emails.

The Defense: Autopilot as a Legal Gray Area

David Risk, the attorney representing the Tesla driver, has adopted a defense strategy that could only exist in our current technological era. He suggests that if his client was involved in the fatal accident, the car was operating under Tesla’s full self-driving capability at the time.

This defense brings to light the legal and ethical quagmires presented by semi-autonomous driving systems. It raises the question: when a car is on Autopilot and tragedy strikes, who is at fault? Is it the driver, engrossed in emails, or the vehicle manufacturer, for creating a system that perhaps promises more than it can deliver?

FSD: Police Edition @elonmusk @Tesla_AI @aelluswamy. What about a special FSD version for law enforcement?

More details below: pic.twitter.com/ieVV00Qy91

FSD Dreams (@FSDdreams), February 16, 2024

Tesla and the Autopilot Controversy

Tesla, led by the enigmatic Elon Musk, has been at the forefront of automotive technology. However, the company’s ambitious claims about its vehicles’ self-driving capabilities have often outpaced reality.

Despite the bold marketing, Tesla’s website includes a disclaimer reminding drivers that they must remain engaged and ready to take control at any moment. That disclaimer highlights a significant gap between how Autopilot is perceived and how it actually operates.

The National Highway Traffic Safety Administration’s (NHTSA) ongoing investigation into Tesla, spurred by a series of crashes in which Teslas struck emergency response vehicles, underscores the urgent need for clarity and regulation in the realm of autonomous driving.

With at least 736 crashes in the US involving Tesla’s Autopilot feature since 2019, including 17 fatalities, the debate over the safety and reliability of these systems is more critical than ever.

Looking Ahead: The Road to Accountability

As this case unfolds, it serves as a stark reminder of the challenges and responsibilities that come with integrating advanced technology into everyday life. This “Autopilot alibi” raises important ethical questions about driver responsibility and our reliance on semi-autonomous systems.

It forces us to confront the reality that, in the pursuit of innovation, we must not lose sight of the need for vigilance, accountability, and, above all, safety on our roads.

This incident reflects not only on Tesla but also on the broader automotive industry and its regulators. It highlights the need for clear guidelines, rigorous testing, and transparent communication about the capabilities and limitations of driver assistance technologies.

As we navigate the future of transportation, striking the right balance between human oversight and technological advancement remains a pivotal and ongoing challenge.