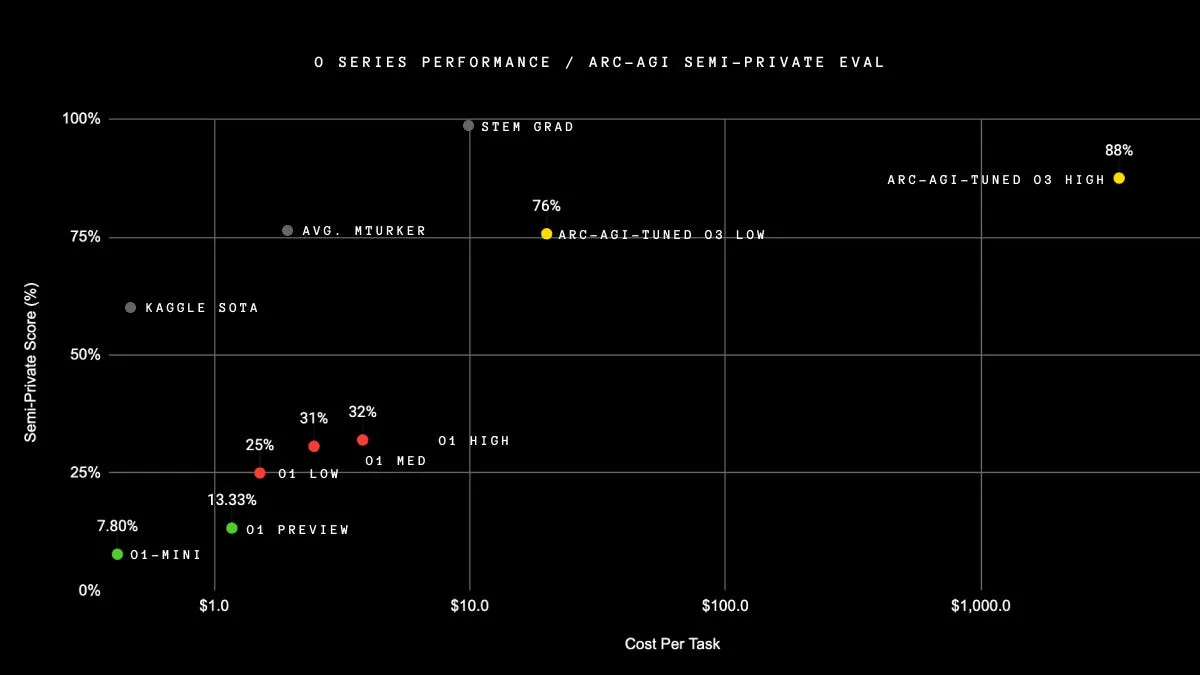

When OpenAI unveiled its o3 AI model in December, the company made striking claims about its performance on FrontierMath, a notoriously difficult set of math problems. Mark Chen, OpenAI’s chief research officer, said that o3 could answer over 25% of FrontierMath questions correctly, leaving competitors in the dust: other offerings on the market could barely answer 2% of the same questions.

“We’re seeing [internally], with o3 in aggressive test-time compute settings, we’re able to get over 25%,” Chen said during a livestream in December, raising expectations that the o3 model would be a game-changer in the AI space.

However, recent results from an independent evaluation have cast doubt on OpenAI’s initial claims. Epoch AI, the research institute behind FrontierMath, released its own evaluation of the publicly released o3 model and found that it scored only around 10% on the benchmark, far below the figure OpenAI originally cited.

While these results don’t necessarily suggest that OpenAI was deceptive, they do highlight a significant discrepancy between the company’s published results and third-party findings. According to Epoch, the lower score could be attributed to differences in testing setups, including the computing power behind the model and the specific subset of FrontierMath problems used. Epoch also noted that OpenAI’s initial results were based on a version of the o3 model that was more powerful, likely leveraging greater computing resources.

OpenAI Defends Its Model, Citing Optimizations for Real-World Use

Despite the discrepancy in benchmark results, OpenAI has defended its o3 model, explaining that the public release of the model was optimized for real-world use cases rather than pure benchmark performance. In a recent livestream, Wenda Zhou, a technical staff member at OpenAI, clarified that the version of o3 released to the public was not the same as the one demoed in December.

“The o3 in production is more optimized for real-world use cases and speed,” Zhou explained, emphasizing that the model’s efficiency and practical applications were prioritized over its benchmark scores. He acknowledged that this could lead to some disparities in test results, but insisted that the public release was still a substantial improvement.

“We still think that this is a much better model,” Zhou added. “You won’t have to wait as long when you’re asking for an answer, which is a real thing with these [types of] models.” This statement underscores OpenAI’s focus on delivering a model that is faster and more cost-effective for everyday use, even if it means sacrificing some benchmark performance.

Is OpenAI’s Transparency in Question?

The o3 model’s lower-than-expected benchmark performance raises important questions about transparency in AI development. OpenAI’s published benchmark results did include a lower-bound score of around 10%, in line with what Epoch measured, but the headline claim of over 25% was based on a version of the model running with more computing power. This raises concerns about whether the company’s public communications adequately represented the capabilities of the model as it was released.

Epoch AI’s findings suggest that OpenAI may have tested the model under more favorable conditions, leading to inflated expectations among the public. Additionally, a post from the ARC Prize Foundation, an organization that tested a pre-release version of o3, corroborated this suspicion, noting that the public release of the model was smaller and less powerful than the version initially benchmarked.

“All released o3 compute tiers are smaller than the version we [benchmarked],” ARC Prize wrote. This highlights the discrepancy between what was promised and what was ultimately delivered, raising concerns about how AI models are marketed and tested.

Benchmarking in the AI Industry: A Growing Controversy

The controversy surrounding OpenAI’s o3 model is part of a larger trend in the AI industry, where benchmark scores are increasingly being scrutinized for their accuracy and transparency. Just this year, other major AI players have faced criticism for allegedly misleading benchmark results. For example, Elon Musk’s xAI was accused of publishing inaccurate benchmark charts for its Grok 3 model, while Meta admitted to touting benchmark scores for a version of its model that differed from the one made available to developers.

This pattern of benchmark controversies underscores the challenges of assessing AI performance. As AI models become more complex and powerful, the line between marketing and reality becomes increasingly blurred, making it harder for consumers and researchers to evaluate their true capabilities.

What’s Next for OpenAI’s o3 Model?

Despite the benchmark controversy, OpenAI is already planning to release a more powerful version of the o3 model in the coming weeks. The upcoming o3-pro model is expected to outperform the current public release of o3, offering more computing power and potentially addressing some of the performance gaps observed in benchmark tests.

In the meantime, OpenAI’s other models, including o3-mini-high and o4-mini, already outperform the public release of o3 on FrontierMath. Their results may offer a glimpse of where OpenAI’s offerings are headed.

As the AI industry continues to evolve, so too will the debate over benchmarking practices. OpenAI’s o3 model has become the latest case study in the complexities of evaluating AI performance, raising important questions about transparency and the role of benchmarks in shaping public perceptions of artificial intelligence.

While the public release of o3 fell short of the score OpenAI first cited, the company says the model was optimized for real-world applications and speed. As the AI industry grapples with benchmarking issues, it will be important for companies like OpenAI to state clearly which version of a model produced a given score, so that expectations align with the capabilities of the models they release.