In the race to build practical quantum computers, error correction is one of the most critical challenges facing the field. A recent update from Google Quantum AI shows its surface code significantly reducing errors in quantum computations. IBM, however, is not far behind: its QLDPC code promises similar error protection with considerably fewer qubits.

Google’s Surface Code Achieves Breakthrough in Error Reduction

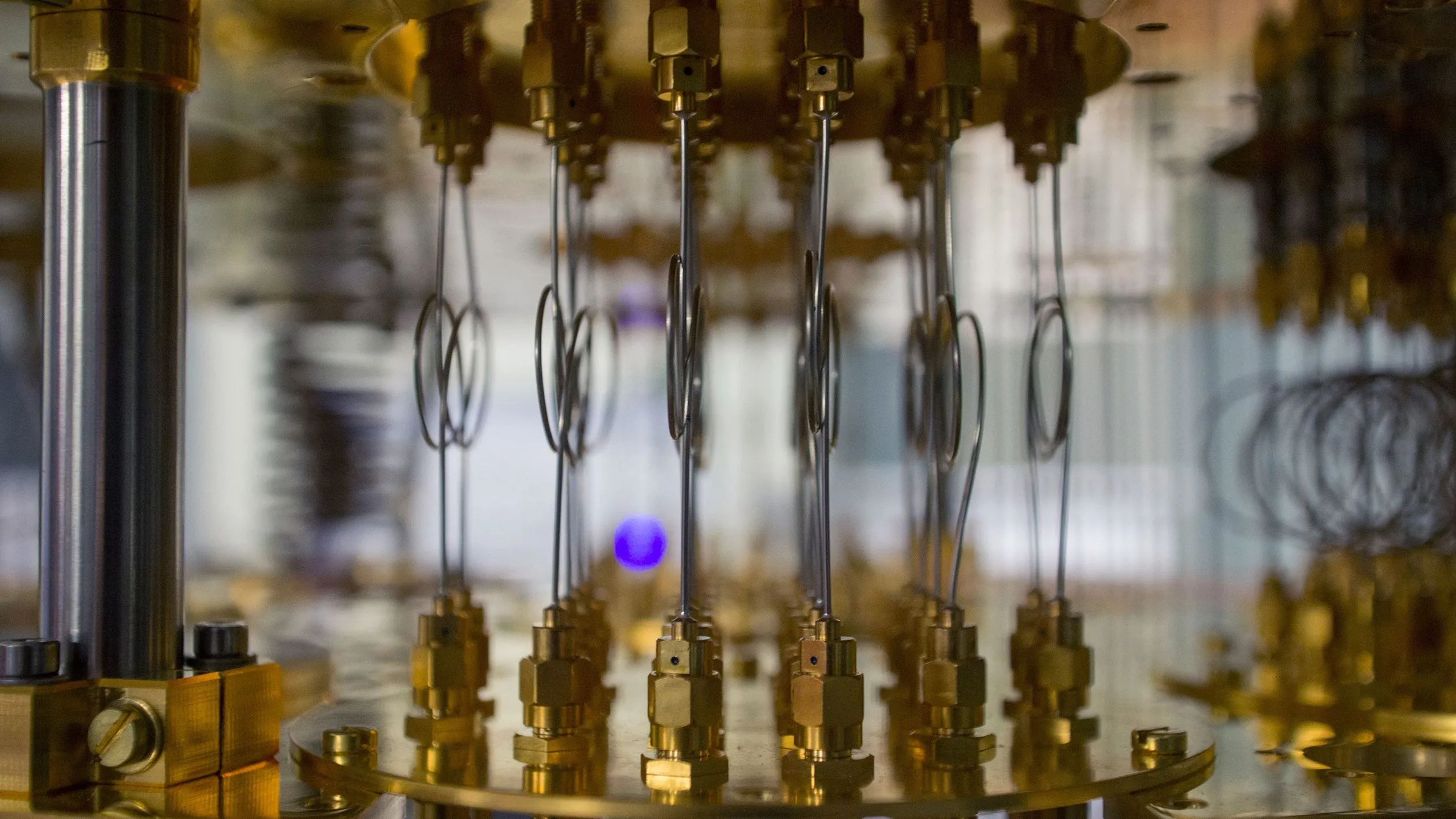

Google’s quantum processor, Willow, has recently made significant strides in error correction, a crucial factor in quantum computing performance. The surface code, a well-established error correction method, groups many physical qubits into a single “logical qubit” so that errors on individual qubits can be detected and corrected before they corrupt a calculation. The technique has been studied for more than two decades and rests on a solid theoretical framework.

In a recent study highlighted by New Scientist, Google Quantum AI’s team showed that the surface code keeps improving as it scales from a 3×3 grid of physical qubits to 5×5 and then 7×7, cutting errors by a factor of two at each step. “The surface code is well understood, with a well-studied theoretical framework. It offers a balance between performance and required qubit connectivity,” said Sergio Boixo of Google Quantum AI.
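The scaling claim can be made concrete with a little arithmetic. This Python sketch assumes the standard rotated surface-code layout, in which a distance-d patch has a d×d grid of data qubits (so the 3×3, 5×5 and 7×7 grids correspond to d = 3, 5, 7) plus d² − 1 measurement qubits. It also treats “a factor of two per step” as a constant suppression factor. Both are simplifying assumptions: real hardware layouts can include extra qubits, and nothing guarantees the factor stays constant at larger sizes. The helper names are hypothetical.

```python
def surface_code_qubits(d: int) -> int:
    """Physical qubits in a rotated distance-d surface code (assumed layout):
    d*d data qubits plus d*d - 1 measurement qubits."""
    return d * d + (d * d - 1)


def relative_logical_error(d: int, suppression: float = 2.0, d0: int = 3) -> float:
    """Logical error rate relative to distance d0, assuming every +2 in code
    distance divides the error rate by a constant `suppression` factor."""
    steps = (d - d0) // 2
    return suppression ** -steps


def distance_for_reduction(target: float, suppression: float = 2.0, d0: int = 3) -> int:
    """Smallest distance whose error is at most 1/target of the d0 error,
    under the same constant-factor (idealized) model."""
    if suppression <= 1:
        raise ValueError("suppression factor must exceed 1")
    steps = 0
    while suppression ** steps < target:
        steps += 1
    return d0 + 2 * steps


# The three grid sizes mentioned in the article.
for d in (3, 5, 7):
    print(f"d={d}: {surface_code_qubits(d)} qubits, "
          f"relative error {relative_logical_error(d):.2f}")

# Extrapolation: how large a patch would a 1,000-fold reduction need?
d_big = distance_for_reduction(1000)
print(f"1000x reduction: d={d_big}, {surface_code_qubits(d_big)} qubits")
```

Under these assumptions the three grids need 17, 49 and 97 qubits with relative error rates of 1.00, 0.50 and 0.25, and a 1,000-fold reduction would require distance 23, or 1,057 qubits for a single logical qubit. That steep growth in qubit count is the overhead that QLDPC codes aim to cut.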

IBM’s Innovative Approach with QLDPC

IBM, meanwhile, proposed a rival approach in 2023 based on a QLDPC (quantum low-density parity-check) code. In this scheme each qubit is connected to six others, so that qubits can monitor one another for errors. The method could potentially match the surface code’s error-correcting ability with far fewer qubits: where the surface code might need 4,000 qubits, the QLDPC code could deliver equivalent performance with just 288.
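To make “connected to six others” concrete, this Python sketch builds the check structure of a bivariate bicycle code, the QLDPC family behind IBM’s 2023 proposal. The specific parameters (cyclic shifts on a 12 × 6 grid, with A = x³ + y + y² and B = y³ + x + x²) are assumed to match IBM’s published 144-data-qubit “gross” code, and the function names are hypothetical. The sketch only checks structural properties: every qubit touches exactly six others, the X-type and Z-type checks commute, and data plus check qubits total 288. It does not compute the code’s distance or simulate decoding.

```python
from collections import Counter
from itertools import product

# (a, b) stands for the monomial x**a * y**b
Monomial = tuple[int, int]


def poly_rows(terms: list[Monomial], size_x: int, size_y: int) -> list[set[int]]:
    """Sparse rows of the (size_x*size_y)-square matrix for a polynomial in x, y
    over GF(2). x cyclically shifts the first index (mod size_x) and y the second
    (mod size_y), so x and y commute. Grid point (i, j) is flattened to i*size_y + j."""
    rows: list[set[int]] = []
    for i, j in product(range(size_x), range(size_y)):
        row: set[int] = set()
        for a, b in terms:
            # Symmetric difference = addition mod 2.
            row ^= {((i + a) % size_x) * size_y + (j + b) % size_y}
        rows.append(row)
    return rows


def transpose(rows: list[set[int]]) -> list[set[int]]:
    """Transpose a square sparse 0/1 matrix stored as a list of row sets."""
    cols: list[set[int]] = [set() for _ in rows]
    for r, row in enumerate(rows):
        for c in row:
            cols[c].add(r)
    return cols


def bivariate_bicycle(size_x: int, size_y: int,
                      a_terms: list[Monomial], b_terms: list[Monomial]):
    """Return (x_checks, z_checks); each check is the set of data qubits it touches.
    Data qubits 0..n-1 form the left block and n..2n-1 the right block, with
    H_X = [A | B] and H_Z = [B^T | A^T]."""
    n = size_x * size_y
    a, b = poly_rows(a_terms, size_x, size_y), poly_rows(b_terms, size_x, size_y)
    a_t, b_t = transpose(a), transpose(b)
    x_checks = [a[r] | {n + c for c in b[r]} for r in range(n)]
    z_checks = [b_t[r] | {n + c for c in a_t[r]} for r in range(n)]
    return x_checks, z_checks


# Assumed parameters for IBM's 144-data-qubit "gross" code.
SIZE_X, SIZE_Y = 12, 6
A_TERMS: list[Monomial] = [(3, 0), (0, 1), (0, 2)]  # A = x^3 + y + y^2
B_TERMS: list[Monomial] = [(0, 3), (1, 0), (2, 0)]  # B = y^3 + x + x^2

x_checks, z_checks = bivariate_bicycle(SIZE_X, SIZE_Y, A_TERMS, B_TERMS)
all_checks = x_checks + z_checks
n_data = 2 * SIZE_X * SIZE_Y
n_check = len(all_checks)  # one check qubit per check

# Every check qubit touches this many data qubits...
check_degrees = {len(c) for c in all_checks}
# ...and every data qubit is touched by this many check qubits.
usage = Counter(q for c in all_checks for q in c)
data_degrees = {usage[q] for q in range(n_data)}
# X and Z checks commute iff they overlap on an even number of data qubits.
commute = all(len(xc & zc) % 2 == 0 for xc in x_checks for zc in z_checks)

print("data:", n_data, "checks:", n_check, "total:", n_data + n_check)
print("check degrees:", check_degrees, "data degrees:", data_degrees,
      "commute:", commute)
```

With these assumed parameters the script reports 144 data qubits and 144 check qubits (288 in total), with degree 6 for every qubit. Because A and B are polynomials in commuting shifts, AB = BA, which is why every X-check overlaps every Z-check on an even number of qubits.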

“QLDPC’s lower qubit overhead is hard to compete with,” Joe Fitzsimons of Horizon Quantum told New Scientist. IBM has even designed its quantum chips to support the connectivity that QLDPC demands, strengthening the case for QLDPC as the future of quantum error correction.

Balancing Act: Hardware Limitations vs. Software Innovations

The debate between the surface code and QLDPC reflects a broader challenge in quantum computing: the interplay between hardware capabilities and software strategies. Google and IBM both use superconducting qubits, which are fixed in place on a chip and therefore limited in how they can be connected. Other technologies, such as ultracold-atom qubits, may offer more flexibility.

“Maybe someone somewhere is working on a type of surface code that is really great, but right now there is competition [to the surface code],” said Yuval Boger of QuEra Computing. His team has been exploring a range of codes to make the most of ultracold-atom qubits.

The Future of Quantum Computing

As the quantum industry evolves, the competition between error correction methods shows how strongly hardware limitations shape which software strategies are practical. Researchers remain divided over which approach will ultimately lead to practical, scalable quantum computing, but both Google’s surface code and IBM’s QLDPC code offer compelling answers to the problem of quantum error correction.

In the end, the race is not just about which code suppresses the most errors, but about which integrates most smoothly with the evolving landscape of quantum technologies. As Google, IBM, and others continue to refine their approaches, the road to practical quantum computing looks both challenging and promising.