As AI-generated text becomes more common, distinguishing it from human writing has become increasingly important. Google’s contribution to this problem is SynthID, a watermarking technology designed to help developers and content creators verify whether a piece of text was produced by an AI model. Recently made available as an open-source tool through the Google Responsible Generative AI Toolkit, SynthID represents a significant step towards responsible AI development.

The Mechanics of SynthID: How It Works

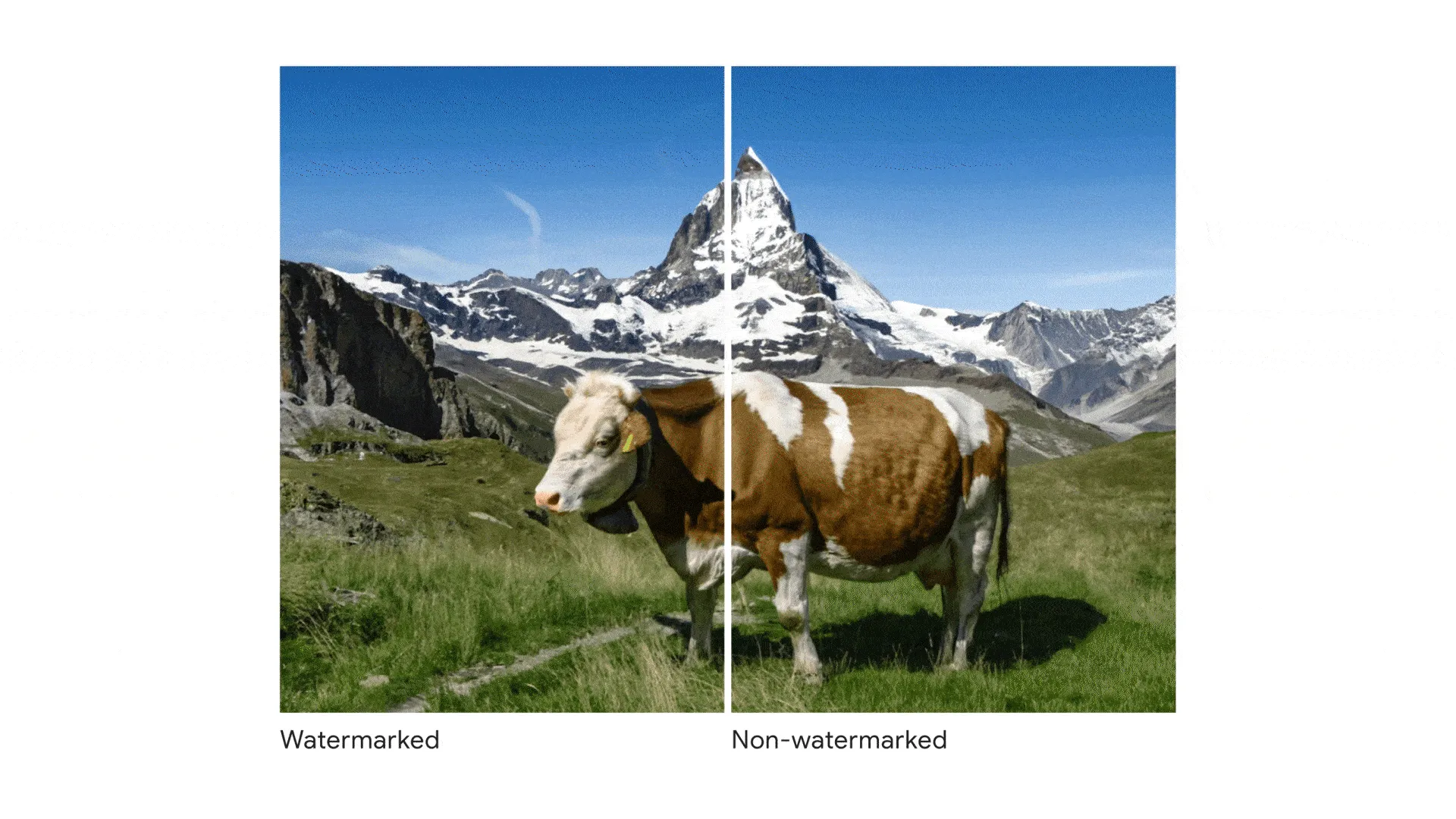

SynthID operates on a simple principle. Large Language Models (LLMs), like those used in AI content generation, produce text one token at a time (a token is a word or part of a word), predicting each next token from the text so far and assigning every candidate a probability score. Google’s SynthID subtly adjusts these probability scores. For instance, in a sentence like “My favourite tropical fruits are __,” the LLM might predict “mango,” “lychee,” “papaya,” or “durian.” SynthID tweaks the probabilities of such candidates so that the choices made across the text embed a unique, invisible watermark in the output.

The watermark consists of the pattern of adjusted probability scores across the whole text, a pattern that software can detect but that remains imperceptible to human readers. Despite concerns that watermarking could degrade content quality, Google says that SynthID does not compromise the creativity, accuracy, or speed of text generation.
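The idea of nudging probabilities and then detecting the resulting pattern can be illustrated with a toy sketch. This is not SynthID's actual algorithm: it is a deliberately simplified keyed-bias scheme, and the uniform "model", the integer token ids (standing in for words like “mango”), and the names `KEY`, `BOOST`, `is_favored`, `generate`, and `favored_fraction` are all assumptions made for illustration.

```python
import hashlib
import random
from typing import List, Optional

VOCAB_SIZE = 50    # toy vocabulary; token ids stand in for words
KEY = "demo-key"   # hypothetical secret watermarking key
BOOST = 4.0        # how strongly favored tokens are up-weighted (assumed value)


def is_favored(key: str, prev_token: int, token: int) -> bool:
    """Keyed pseudo-random coin flip: about half of all tokens are favored in each context."""
    digest = hashlib.sha256(f"{key}:{prev_token}:{token}".encode()).digest()
    return digest[0] % 2 == 0


def generate(length: int, rng: random.Random, key: Optional[str] = None) -> List[int]:
    """Sample from a uniform toy 'model'; if a key is given, nudge the probabilities."""
    tokens = [0]  # fixed start token
    for _ in range(length):
        prev = tokens[-1]
        weights = [1.0] * VOCAB_SIZE  # stand-in for the model's next-token scores
        if key is not None:
            weights = [w * BOOST if is_favored(key, prev, t) else w
                       for t, w in enumerate(weights)]
        tokens.append(rng.choices(range(VOCAB_SIZE), weights=weights)[0])
    return tokens


def favored_fraction(tokens: List[int], key: str) -> float:
    """Detector: share of tokens the key marks as favored, given the previous token."""
    hits = sum(is_favored(key, prev, tok) for prev, tok in zip(tokens, tokens[1:]))
    return hits / (len(tokens) - 1)


rng = random.Random(0)
watermarked = generate(400, rng, key=KEY)
plain = generate(400, rng)

wm_score = favored_fraction(watermarked, KEY)
plain_score = favored_fraction(plain, KEY)
print(f"watermarked: {wm_score:.2f}, unwatermarked: {plain_score:.2f}")
```

In this sketch, unwatermarked text should land on favored tokens about half the time, while watermarked text should do so noticeably more often. Only someone holding the key can compute the score, which is why the pattern stays invisible to ordinary readers.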

Why Watermarking AI Content Matters

The need for such technologies is growing. As AI tools become more sophisticated, their use has expanded into sensitive areas, including political discourse and personal privacy, and the misuse of AI-generated content to spread misinformation or create non-consensual material is a growing concern. In response, jurisdictions such as California and China are already moving towards making AI watermarking a legal requirement.

Pushmeet Kohli, Vice President of Research at Google DeepMind, highlighted the tool’s significance in a statement to MIT Technology Review, saying, “Now, other AI developers will be able to use this technology to help them detect whether text outputs have come from their own models, making it easier for more developers to build AI responsibly.”

The Limitations and Future of SynthID

While SynthID marks a promising advancement in AI technology, it is not without its limitations. The tool is less effective with shorter texts, which give a detector fewer tokens to examine, and with content that has been heavily edited, paraphrased, or translated, since those changes replace the tokens that carry the watermark. It may also struggle with straightforward factual responses, where there is little room to adjust word choices without affecting accuracy. Despite these challenges, Google views SynthID as a critical component of a broader strategy aimed at developing more reliable AI identification tools.
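A rough calculation shows why short texts are harder to verify. The sketch below assumes a hypothetical detector that counts how many tokens fall on the side a watermark favors, where unwatermarked text would hit about half by chance, and it assumes a watermarked hit rate of 80%. Both the detector and the 80% figure are illustrative assumptions, not SynthID's actual scoring method.

```python
import math


def z_score(hits: int, n: int) -> float:
    """Standard deviations by which `hits` exceeds the n/2 expected by chance."""
    return (hits - 0.5 * n) / math.sqrt(0.25 * n)


ASSUMED_HIT_RATE = 0.8  # hypothetical share of favored tokens in watermarked text

results = {}
for n in (10, 100, 400):
    hits = round(ASSUMED_HIT_RATE * n)
    results[n] = round(z_score(hits, n), 2)
    print(n, results[n])
# prints:
# 10 1.9
# 100 6.0
# 400 12.0
```

With the same hit rate, the evidence grows with the square root of the text length: ten tokens give a score that chance could plausibly produce, while a few hundred tokens leave little doubt.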

In a blog post earlier this year, Google acknowledged the complexities of AI content verification, stating, “SynthID isn’t a silver bullet for identifying AI-generated content, but it is an important building block for developing more reliable AI identification tools and can help millions of people make informed decisions about how they interact with AI-generated content.”

Google’s open-sourcing of SynthID is more than a technical achievement; it is a commitment to transparency and ethical responsibility in AI development. By giving developers around the world a tool to detect whether text came from their own models, Google is setting a precedent for the future of AI technology, one that values integrity as much as innovation. As AI continues to permeate our digital lives, initiatives like this are crucial for ensuring a trustworthy and secure technological environment.