In an era when digital content is consumed and forgotten at breakneck speed, the integrity of visual media has become an essential concern. Google has announced an update to the Google Photos app that aims to make it clearer when images have been altered with its AI-powered editing tools. Even so, questions remain about whether these measures will help users identify AI-edited content at a glance.

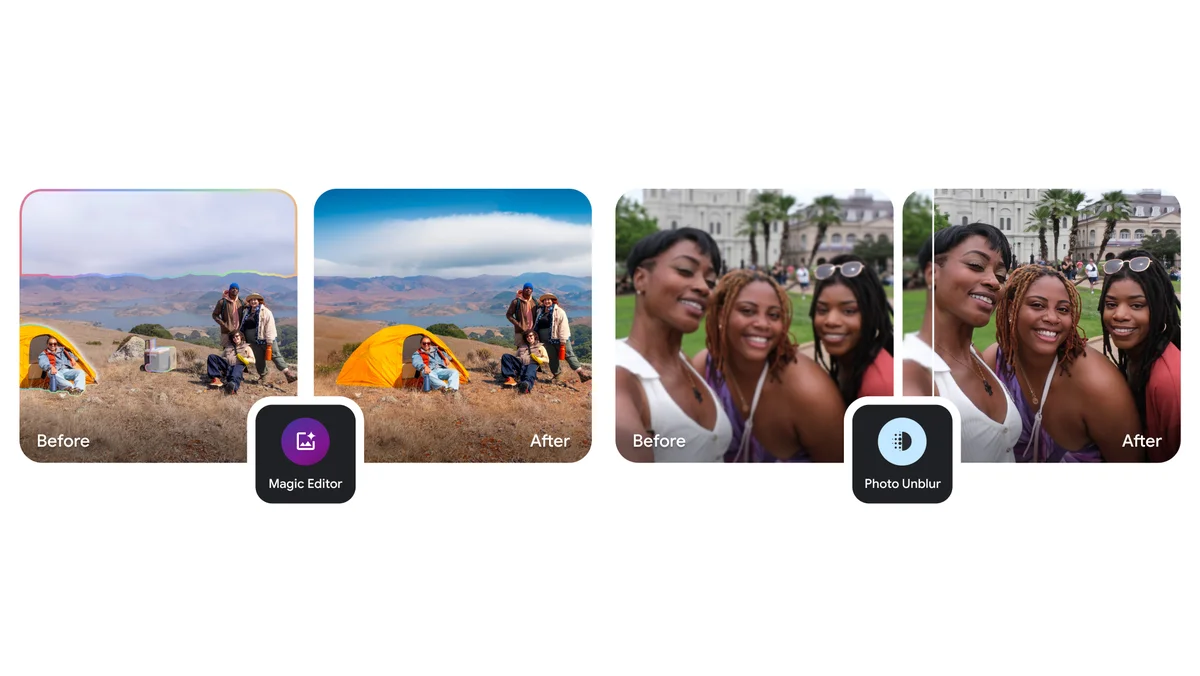

Starting next week, Google Photos users will see a new disclosure in the “Details” section of each photo indicating whether the image has been modified with one of Google’s AI editing features, such as Magic Editor, Magic Eraser, or Zoom Enhance. The move follows the launch of the Pixel 9 phones, which shipped with advanced AI photo-editing capabilities, and comes amid growing scrutiny over the lack of transparency around AI-altered images.

Unpacking Google’s Disclosure Strategy

The new disclosure method is not without its critics. The main contention is its subtlety: the disclosure is tucked away at the bottom of a photo’s details, far from immediate view. Michael Marconi, Google Photos’ communications manager, acknowledged that the initiative is ongoing, stating, “This work is not done. We’ll continue gathering feedback, enhancing and refining our safeguards, and evaluating additional solutions to add more transparency around generative AI edits.”

The concern extends beyond placement. The current approach does not include a visible watermark within the photo’s frame, a more direct signal of AI involvement that viewers could see without digging through a photo’s details. Without one, AI-edited images can fuel misinformation, especially when they are shared on social media or other platforms without their accompanying metadata.

The Challenge of Maintaining Integrity in Digital Media

The integration of AI tools into photo editing has met with mixed reactions. While these features can dramatically improve a photo’s quality and composition, they also raise significant ethical questions about the authenticity of digital imagery. AI’s ability to create synthetic content that blurs the line between real and fabricated could have profound implications, particularly for news reporting and historical documentation.

Google’s approach, embedding disclosures in metadata and now also surfacing them in the app’s UI, reflects a broader industry trend. Meta already labels AI-generated content on Facebook and Instagram, and Google plans to extend similar flags to AI images in its Search results later this year.

Future Directions and Industry Impact

As the technology evolves, so too must the mechanisms for ensuring digital authenticity. The ongoing debate highlights the need for industry standards that reliably signal AI involvement in content creation. Metadata tags and in-app disclosures are steps in the right direction, but their effectiveness will ultimately depend on user awareness and on platforms applying these standards consistently.

Google’s latest update marks a notable moment in the discourse around digital media ethics. As AI continues to transform photo editing, the challenge for tech giants lies not only in developing new features but in fostering an environment where transparency is paramount. How they balance innovation and integrity will likely shape how people consume and trust digital media.